-

Latest Version

MySQL Workbench 8.0.42 LATEST

-

Review by

-

Operating System

Windows 10 (64-bit) / Windows 11

-

User Rating

Click to vote -

Author / Product

-

Filename

mysql-workbench-community-8.0.42-winx64.msi

Developed by Oracle, it provides database administrators, developers, and data architects with a unified visual platform to design, develop, and manage MySQL databases.

MySQL Workbench Desktop for Windows is available in both Community (FREE) and Commercial editions, offering a range of features for database modeling, SQL development, administration, and performance tuning.

Key Features

Database Modeling and Design – Allows users to create and modify ER diagrams, forward and reverse engineer schemas, and visually design database structures.

SQL Development – Offers an SQL editor with syntax highlighting, auto-completion, and query execution tools.

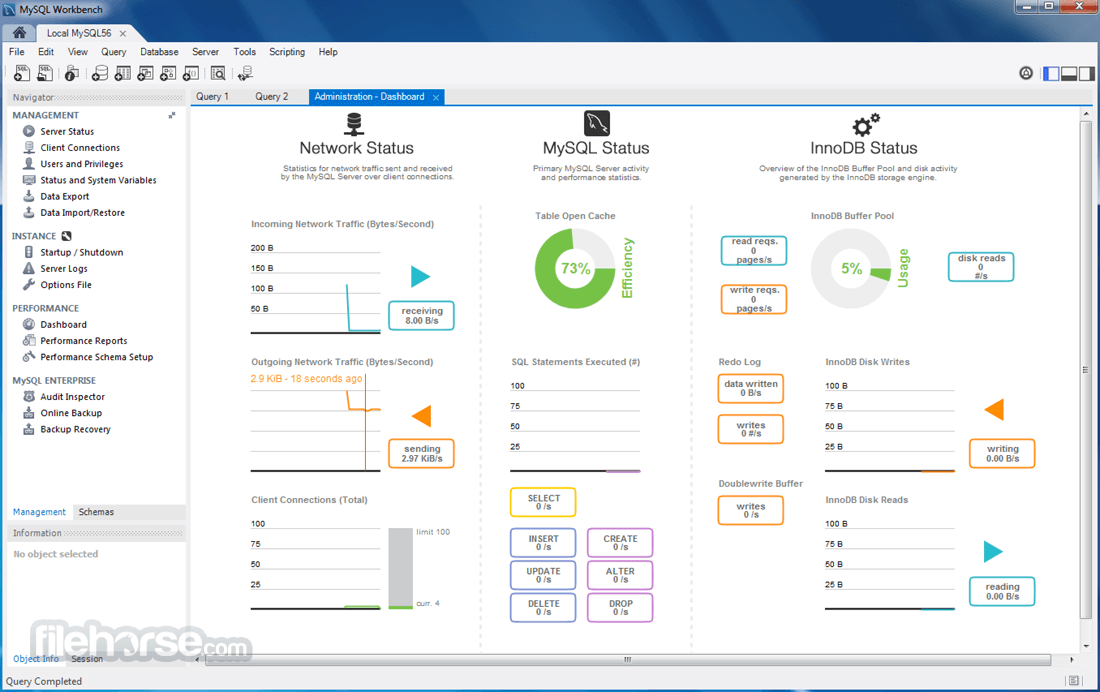

Database Administration – Provides features for managing users, privileges, server configuration, logs, and backup management.

Performance Monitoring and Tuning – Includes built-in tools for database performance analysis, query optimization, and debugging.

Data Migration – Supports migration from various databases such as Microsoft SQL Server, PostgreSQL, and SQLite to MySQL.

User Interface

The interface of MySQL Workbench is clean and well-organized, with three main panels:

Navigator Panel – Lists database objects such as schemas, tables, and views.

SQL Editor Panel – Allows users to write and execute SQL queries with syntax highlighting and auto-completion.

Results Grid – Displays query execution results in a tabular format, with export options for reports.

While the UI is feature-rich, beginners might find it overwhelming due to its complexity.

Installation and Setup

- Download the App – Available from the official MySQL website or FileHorse.

- Install MySQL Server – Required for Workbench to function properly.

- Run the Installer – Select the appropriate version and configure MySQL connections.

- Connect to a Database – Use the MySQL Workbench connection manager to connect to a local or remote MySQL server.

- Start Using MySQL Workbench – Create schemas, execute queries, or manage databases.

Creating a New Database – Open MySQL Workbench, go to “Schemas” and click on “Create Schema.”

Writing SQL Queries – Use the SQL editor to write and execute queries.

Designing Database Models – Use the model editor to create ER diagrams and generate SQL scripts.

Performing Data Migration – Access the data migration wizard to transfer data from other database platforms.

Managing Users and Privileges – Navigate to the “Administration” tab to set user roles and permissions.

FAQ

Is MySQL Workbench free to use?

Yes, the Community Edition is free, but there are Commercial editions with additional features.

Can MySQL Workbench connect to remote databases?

Yes, it supports SSH tunneling and direct remote connections.

Does MySQL Workbench support other databases besides MySQL?

It is primarily designed for MySQL but supports migration from other database systems.

How do I optimize query performance in MySQL Workbench?

Use the Query Analyzer and Performance Schema tools to analyze and optimize queries.

Is MySQL Workbench available for macOS and Linux?

Yes, it is cross-platform and available for Windows, macOS, and Linux.

Alternatives

DBeaver – Open-source multi-database management tool with a modern interface.

HeidiSQL – Lightweight MySQL administration tool with a focus on speed and simplicity.

phpMyAdmin – Web-based MySQL management tool with an intuitive UI.

Navicat for MySQL – Premium database management tool with advanced features.

TablePlus – Modern GUI tool for multiple database engines including MySQL.

Pricing

MySQL Workbench Community Edition – Free

MySQL Workbench Commercial Edition – Part of MySQL Enterprise subscription (pricing varies)

System Requirements

- OS: Windows 11 or Windows 10 (64-bit)

- Processor: Intel or AMD 64-bit processor

- RAM: Minimum 4GB (8GB+ recommended)

- Storage: At least 200MB free disk space

- Graphics: DirectX 10 compatible graphics card

- Comprehensive set of tools for MySQL database management

- Supports database design, SQL development, and administration

- Free Community Edition available with essential features

- Built-in performance monitoring and optimization tools

- Data migration tools for transferring from other databases

- Can be resource-intensive for large databases

- Limited support for non-MySQL databases

- UI can be overwhelming due to feature complexity

- Some advanced features are locked behind a paid subscription

MySQL Workbench is a must-have tool for MySQL developers and administrators who need a robust and feature-rich database management environment. While it offers powerful tools for database design, SQL execution, and performance tuning, beginners may find its interface and functionality challenging at first.

Note: Requires .NET Framework.

Also Available: Download MySQL Workbench for Mac

What's new in this version:

Audit Log Notes:

- <COMMAND_CLASS> was not populated for <NAME>Execute</NAME>.

- For more information, see Logging Specific Event Classes

Compilation Notes:

- Group Replication: The OpenSSL Engine interface is deprecated, and is no longer being included in OpenSSL v3 main packages by some Linux distributions, including Fedora.

- To avoid build issues, the usage of the OpenSSL Engine interface by the Group Communication System (GCS) is now restricted to OpenSSL versions previous to 1.1

- Linux: Use /usr/bin/gcc (GCC 14.2.1) when building the server on Oracle Linux 10

- Upgraded the bundled Curl library to version 8.12.1

- Abseil could not be built on FreeBSD

- In order to use xxhash functions independently from the lz4 library (bundled or source), we compiled xxhash.c into our own binaries, which required using a great many CMake directives. Instead, we now build an interface library for xxhash, and link with that wherever such functions are used

- Use xxHash-0.8.2 from GitHub rather than the version bundled with lz4

SQL Function and Operator Notes:

- Important Change: When an SQL function is improved from one release to the next, it may throw SQL errors in situations in which it previously did not. If this happens in a table's constraints, default expressions, partitioning expressions, or virtual columns, the table could not be opened. This prevented both analyzing the problem (using, for example, SHOW CREATE TABLE) and addressing it (such as with an ALTER TABLE ... DROP ... statement).

- Now, on server upgrade, we scan the data dictionary for tables that use any of the features just mentioned. We then try to open such tables, and if we fail to do so, we alert the user. This patch addresses this. The --check-table-functions server option introduced in this release helps to address this problem by making it possible to specify the server's behavior when encountering an error with such a function. Set this option to WARN in order to log a warning for each table which the server could not open; setting it to ABORT also logs these warnings as WARN, but aborts the server upgrade if any issues were found.

- ABORT is the default; this enables the user to fix the issue using the older version of the server before upgrading to the new one. WARN flags the issues, but allows the user to continue in interactive mode while addressing the problem

INFORMATION_SCHEMA Notes:

- Fixed a performance issue in the PROCESSLIST table

Functionality Added or Changed:

- Important Change: For platforms on which OpenSSL libraries are bundled, the linked OpenSSL library for MySQL Server has been updated to version 3.0.16. For more information, see OpenSSL 3.0 Series Release Notes and OpenSSL Security Advisory (11th February 2025)

- Performance; Replication: The data structure used in tracking binary log transaction dependencies has been changed from Tree to ankerl::unordered_dense::map, which uses approximately 60% less space, and which should thus contribute to better dependency tracking performance

- InnoDB: To improve debugging, the buf_page_t and buf_block_t structure's metadata is now printed to the error log

- Increased the historical 1024-byte limit when printing the current query during signal handling to 1073741824 (1024 * 1024 * 1024)

Fixed:

- InnoDB: Fixed a potential memory leak in several places in the innobase code

- InnoDB: Under certain circumstances, MySQL could crash during shutdown due to pages which were still fixed or dirty. Errors similar to the following were logged:

- [ERROR] [MY-011908] [InnoDB] [FATAL] Page [page id: space=46, page number=75] still fixed or dirty

-[ERROR] [MY-013183] [InnoDB] Assertion failure: buf0buf.cc:5889:ib::fatal triggered thread 139963705668608

- (Bug #37391519)

- InnoDB: CHECK TABLE for spatial indexes did not verify the MBR against the geometry MBR stored in the clustered index record. This could result in incorrect behaviour of spatial indexes.

- As of this release, CHECK TABLE EXTENDED verifies the MBR matches the MBR stored in the clustered index record

- InnoDB: Fixed an issue relating to pessimistic row update.

- Our thanks to Mengchu Shi and the team at Alibaba for the contribution

- InnoDB: The CHECK TABLE operation could incorrectly report corruption in spatial indexes

- InnoDB: Fixed an issue relating to InnoDB redo log recovery

- InnoDB: Fixed an issue relating to reading index_id values

- InnoDB: Fixed an issue relating to lower_case_table_names

- InnoDB: Partition table indexes were not checked when retrieving a record count while that table's definition was being altered by another client session. The record count was executed without error.

- - As of this release, the index is checked to ensure it is usable when retrieving a record count

- InnoDB: Refactored code related to BPR_PCUR_* positioning for restore operations

- InnoDB: Changes made to innodb_spin_wait_delay in MySQL 8.0.30 negatively impacted performance

- InnoDB: Under certain circumstances, using ALTER TABLE with INPLACE to modify the size of a column could result in an index which exceeds the valid size limit (767 bytes). This occurred for tables with a row format of Redundant or Compact and the row format was not explicitly defined in the table creation.

- As of this release, a validation is performed and an error returned by any ALTER TABLE, INPLACE operation which will result in an invalid index size

- InnoDB: Fixed a memory leak in the Clone_persist_gtid thread.

- Our thanks to Baolin Huang and the team at Alibaba for the contribution

- Partitioning: When inserting NOW() into a column not part of the partition key of a partitioned table, all partitions were retrived, and no pruning occurred

- Replication: In a source-replica setup, the replica encountered irregular failures of UPDATE and DELETE statements with ER_KEY_NOT_FOUND errors on the same table. (The replica's binary log and GTID records showed that the row required was committed, and had not been deleted or updated.) This occurred on the replica when the row-matching algorithm used was HASH_SCAN and two rows in the same table had the same CRC32 value.

- In the event of such a CRC32 collision, finding a matching CRC32 in the hash table does not guarantee that the correct row is being updated, so the algorithm iterates over any multiple entries having the same CRC32, and compares the full record for each of them in a loop; the problem occurred due to the fact that the logic to exit this loop was incorrect. This logic has now been corrected

- Replication: The asynchronous_connection_failover_delete_source() function did not always perform as expected in all cases

- Replication: In some cases, the asynchronous_connection_failover_add_source() function did not perform as expected

- Replication: In some cases, MASTER_POS_WAIT() did not perform as expected

- Replication: The asynchronous_connection_failover_add_managed() function in some cases did not produce the expected result

- Replication: When the server was under a heavy write load, the binary log position for gtid_executed as shown in the Performance Schema log_status table did not match that of the gtid shown in the binary log file.

- We fix this by increasing the scope of the lock on the log_status table when querying it to ensure that transactions in the commit pipeline are completed. This ensures that a query against the log_status table waits until gtid_executed has been fully updated, thereby guaranteeing consistency with its position in the binary log

- Group Replication: When a secondary joined the group, it might happen that all group members started to grow the value of the column COUNT_TRANSACTIONS_ROWS_VALIDATING column of the Performance Schema replication_group_member_stats table indefinitely. This impacted memory consumption in all group members, eventually leading to thrashing if not mitigated by restarting the secondary group member that triggered the behavior, or in some cases, by restarting the whole group.

- Analysis pointed to issues with the Group Replication start operation, which checks whether there are partial transactions on the group_replication_applier channel from previous group participation; if any are found, this channel is stopped after applying all complete transactions and its relay logs purged, and then the channel is restarted. After this, distributed recovery is performed, applying any missing data from group members.

- The issues arose when the Group Replication pipeline operation for stopping the group_replication_applier channel incorrectly stopped a periodic task from the certifier module, which caused some periodic internal operations not to take place. One of these tasks was the periodic sending of the committed transactions; this omission prevented garbage collection for certification, which in turn caused a continuous increase in COUNT_TRANSACTIONS_ROWS_VALIDATING in the Performance Schema replication_group_member_stats table.

- To solve this problem, we have taken steps to ensure that the pipeline operation for stopping the group_replication_applier channel no longer interferes with the certifier module, which also stops spurious values from being added for COUNT_TRANSACTIONS_ROWS_VALIDATING

- Group Replication: When running Group Replication, some transactions may not have write sets, as with empty transactions with GTID_NEXT specified, or DDL statements. For such transactions, Group Replication cannot check conflicts; thus, it is not known whether they can be applied in parallel, and for this reason, Group Replication follows a pessimistic approach, and runs them sequentially, potentially leading to an impact on performance.

- While DDL must be applied sequentially, there is no actual reason to force such behavior for empty transactions, so this fix makes it possible for empty transactions to be applied concurrently with other nondependent transactions

- Group Replication: A group running group replication with a primary i1 and two secondaries i2 and i3 started to have intermittent issues because of high memory usage on the primary. The secondaries began reporting the primary as unreachable then reachable again, and the primary began reporting the secondaries as intermittently reachable then reachable as well. Following a period of such instability, the secondaries expelled the original primary (i1) and elected a new one (i2).

- Under these conditions, queries against the performance_schema.replication_group_members table on the former primary (i1) reported i1 as ONLINE and PRIMARY, i2 as ONLINE and SECONDARY, and i3 as ONLINE and SECONDARY for an extended period of time (12 hours or more) until the mysqld process was restarted on i1.

- The problems observed were found to have begun on the original primary (i1) when one of the secondaries was overloaded and began intermittently leaving and joining the group, its connections being dropped and recreated repeatedly on the primary server. During the reconnection process, the primary hung when trying to create the connection, thus blocking the single XCom thread. This was traced to the invocation of SSL_connect() on the XCom communication stack, which changed in MySQL 8.0.27 from asynchronous to synchronous form. When a node was overloaded, it might not respond to the SSL_connect() call, leaving the connecting end blocked indefinitely.

- To fix this, we now connect in a way that is non-blocking, and that returns in case of a timeout, leaving the retry attempts to the caller—in this specific case, the XCom thread when trying to reconnect to another node

- The fprintf_string() function in mysqldump did not use the actual quote character for string escaping

- EXPLAIN did not always handle subqueries correctly

- If a demangled function name exceeded 512 bytes in a stack trace, the function name was truncated and a newline was not printed.

- As of this release, long strings, such as filenames and demangled functions, are written directly to the output

- mysqldump did not escape certain special characters properly in its output. With this fix, mysqldump now follows the rules as described in String Literals

- Some operations on tables having functional indexes were not handled properly

- Attempting to install an unknown component using INSTALL COMPONENT was not always handled correctly

- The Audit Log plugin did not handle errors correctly when writing JSON output.

- See MySQL Enterprise Audit, for more information

- An UPDATE subsequent to an INSERT affecting a table which had a BEFORE INSERT trigger was sometimes rejected with a null value error when the INSERT had set a NOT NULL column to NULL, even though this should have been allowed by the server sql_mode in effect

- In some cases, components could not reuse the same connection for running multiple queries

- Improved error handling for stored routines

- Stored routines were not always invoked correctly in prepared statements

- Increased the size of SEL_ROOT::elements from uint16 to size_t

- Removed an issue with multibyte UTF8 handling

- An ORDER BY containing an aggregation was not always handled correctly

- An optimizer hint was ignored, unexpectedly requiring the use of FORCE INDEX, when querying a view that included a UNION. For more information, see Optimizer Hints

- Some subselects were not handled correctly

- An invalid DDL statement in certain cases was not always rejected as expected

- Improved the internal function append_identifier()

- Normally, a view with an unused window definition should be updatable, but when it contained a subquery, it was marked as not updatable. At update time, the window was eliminated, but this was too late to allow an update to be performed.

- We fix this by testing mergeability, by checking the presence of window functions, rather than that of window definitions; this allows the view to be updateable, and the problematic UPDATE to succeed

- In some cases, SET did not perform correctly in prepared statements

This fix addresses the following issues:

- Query_expression::is_set_operation() was not always executed properly

- Some sequences of DML statements could lead to an unplanned exit

- Some nested subselects were not always handled correctly

- On Debian, dh_strip_nondeterminism is no longer executed on zip and gzip files within the packages

- Removed an issue relating to invalid UTF8 values

- Addressed an issue relating to an invalid identifier

- A negative impact in performance was observed when using a multivalued index with ORDER BY DESC and LIMIT in a query, where the value specified by LIMIT was greater than the number of rows actually in the result

- When pushing a condition down to a derived table, we clone the condition, and, if the underlying field is a view reference (that is, a field from a merged derived table), we strip off the view reference and clone the expression that it references. When the underlying expression is a constant expression from a table that is on the inner side of an outer join, it cannot be treated as a normal constant because of the need to generate NULL values. When we stripped off the view reference, this information was lost, leading to wrong results.

- We fix this by avoiding condition pushdown for such cases

OperaOpera 120.0 Build 5543.61 (64-bit)

OperaOpera 120.0 Build 5543.61 (64-bit) SiyanoAVSiyanoAV 2.0

SiyanoAVSiyanoAV 2.0 PhotoshopAdobe Photoshop CC 2025 26.8.1 (64-bit)

PhotoshopAdobe Photoshop CC 2025 26.8.1 (64-bit) BlueStacksBlueStacks 10.42.86.1001

BlueStacksBlueStacks 10.42.86.1001 CapCutCapCut 6.6.0

CapCutCapCut 6.6.0 Premiere ProAdobe Premiere Pro CC 2025 25.3

Premiere ProAdobe Premiere Pro CC 2025 25.3 PC RepairPC Repair Tool 2025

PC RepairPC Repair Tool 2025 Hero WarsHero Wars - Online Action Game

Hero WarsHero Wars - Online Action Game SemrushSemrush - Keyword Research Tool

SemrushSemrush - Keyword Research Tool LockWiperiMyFone LockWiper (Android) 5.7.2

LockWiperiMyFone LockWiper (Android) 5.7.2

Comments and User Reviews